Prerit Gupta

Ph.D. Student in Computer Science

Purdue University

I am a Ph.D. student in Computer Science at Purdue University, working in the IDEAS Lab under the supervision of Prof. Aniket Bera. My research lies at the intersection of interactive human motion graphics and multimodal generative AI.

My current work explores how multimodal learning frameworks that combine motion, text, and music can enable better-contextualized and more controllable generation. This includes building datasets and models for interactive and reactive motion generation, as well as context-sensitive human–AI interaction in immersive environments such as VR. My upcoming projects explore motion editing and long-term generation of two-person interactions.

Previously, I earned my B.Tech and M.Tech in Electronics and Electrical Communication Engineering from IIT Kharagpur.

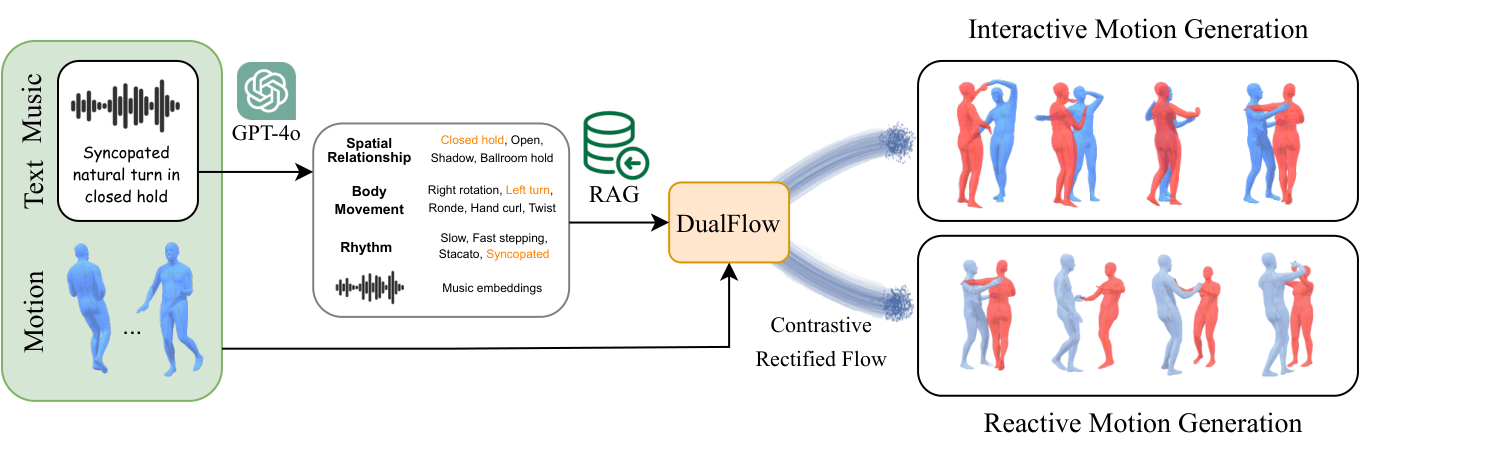

Prerit Gupta, Shourya Verma, Ananth Grama, and Aniket Bera

International Conference on Learning Representations (ICLR), 2026

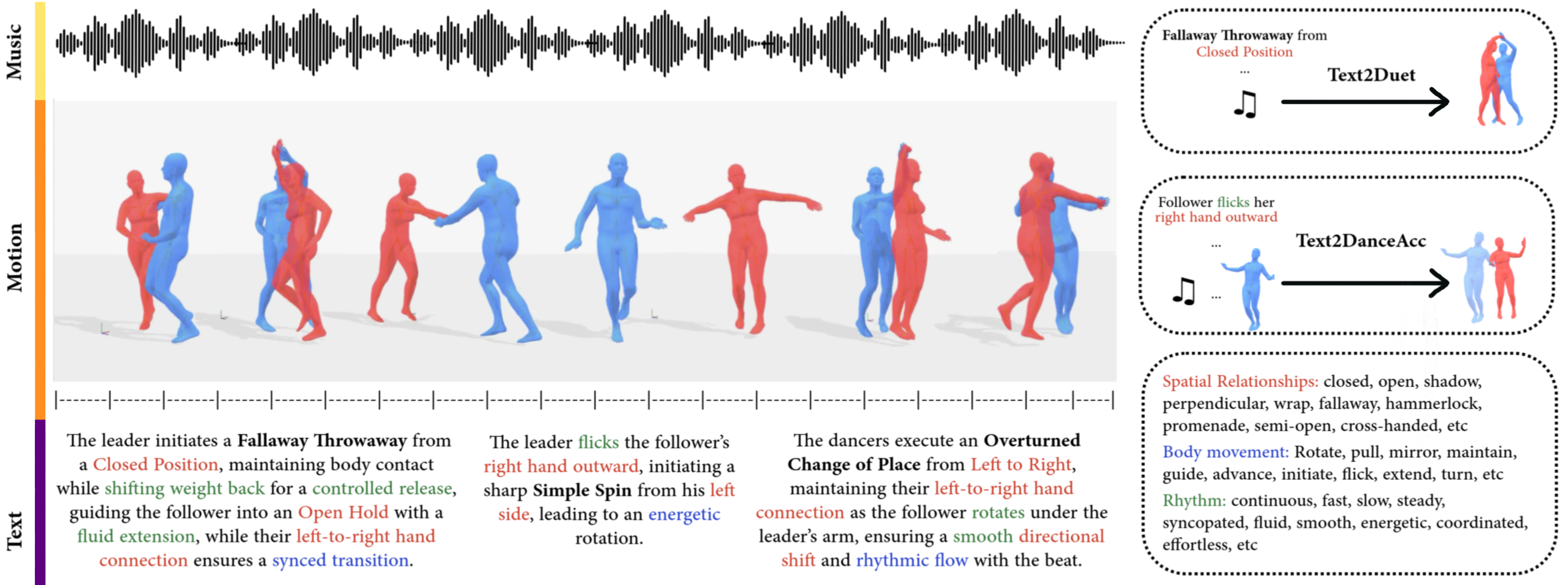

Prerit Gupta, Jason Alexander Fotso-Puepi, Zhengyuan Li, Jay Mehta, and Aniket Bera

IEEE/CVF International Conference on Computer Vision (ICCV), 2025